How to Measure FLOP/s for Neural Networks Empirically? – Epoch

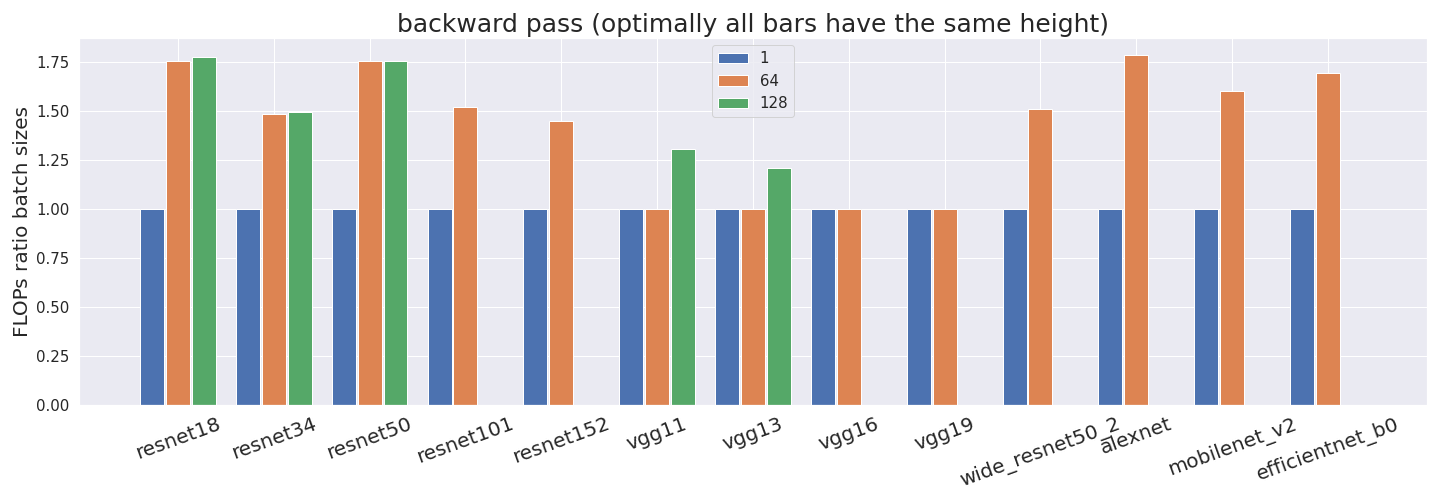

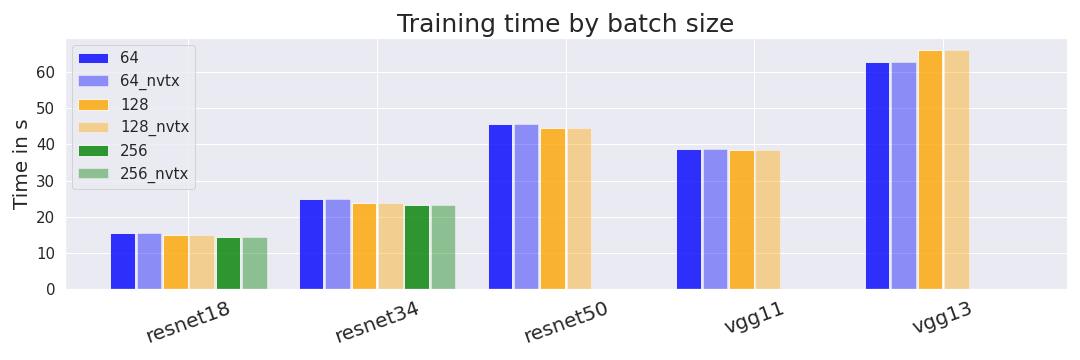

Computing the utilization rate for multiple Neural Network architectures.

Overview for generating a timing prediction for a full epoch
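The two captions above describe the basic bookkeeping: count the FLOP a workload performs, divide by measured wall-clock time to get achieved FLOP/s, compare that to the hardware's peak to get a utilization rate, and reuse the achieved rate to predict how long an epoch will take. The following is a minimal sketch of that arithmetic, not the article's own method. It assumes the common convention of 2 FLOP per multiply-add, uses a naive pure-Python matrix product as a stand-in for a layer's forward pass, and uses a hypothetical `ASSUMED_PEAK_FLOPS` value. Real measurements would time the framework's kernels on the target accelerator, so the utilization this toy prints will be tiny.

```python
import random
import time

# Hypothetical peak throughput of the target hardware (FLOP/s).
# Replace with the datasheet figure for your device and numeric precision.
ASSUMED_PEAK_FLOPS = 1e12


def matmul_flop(m: int, k: int, n: int) -> int:
    """FLOP for an (m x k) @ (k x n) product, counting one multiply-add as 2 FLOP."""
    return 2 * m * k * n


def naive_matmul(a, b):
    """Plain-Python matrix product; stands in for a dense layer's forward pass."""
    k = len(b)
    n = len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)] for row in a]


def measure_flops_per_s(m: int, k: int, n: int, repeats: int = 3) -> float:
    """Time repeated products and divide the counted FLOP by elapsed seconds."""
    rng = random.Random(0)
    a = [[rng.random() for _ in range(k)] for _ in range(m)]
    b = [[rng.random() for _ in range(n)] for _ in range(k)]
    naive_matmul(a, b)  # warm-up run, excluded from timing
    start = time.perf_counter()
    for _ in range(repeats):
        naive_matmul(a, b)
    elapsed = time.perf_counter() - start
    return matmul_flop(m, k, n) * repeats / elapsed


def utilization_rate(achieved: float, peak: float) -> float:
    """Fraction of the hardware's peak FLOP/s that was actually achieved."""
    return achieved / peak


def predict_epoch_seconds(flop_per_step: float, steps_per_epoch: int,
                          achieved_flops_per_s: float) -> float:
    """Rough epoch-time estimate: total FLOP in one epoch / achieved FLOP/s."""
    return flop_per_step * steps_per_epoch / achieved_flops_per_s


if __name__ == "__main__":
    achieved = measure_flops_per_s(64, 64, 64)
    print(f"achieved: {achieved:.3e} FLOP/s")
    print(f"utilization: {utilization_rate(achieved, ASSUMED_PEAK_FLOPS):.2e}")
    # Hypothetical workload: one 64x64x64 product per step, 1000 steps per epoch.
    secs = predict_epoch_seconds(matmul_flop(64, 64, 64), 1000, achieved)
    print(f"predicted epoch time: {secs:.2f} s")
```

The epoch estimate only holds if the per-step FLOP count and the achieved rate were measured under the same conditions (batch size, precision, hardware); data loading and other non-FLOP overheads are ignored here.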

How to measure FLOP/s for Neural Networks empirically? — LessWrong

SiaLog: detecting anomalies in software execution logs using the siamese network

The Flip-flop neuron – A memory efficient alternative for solving challenging sequence processing and decision making problems

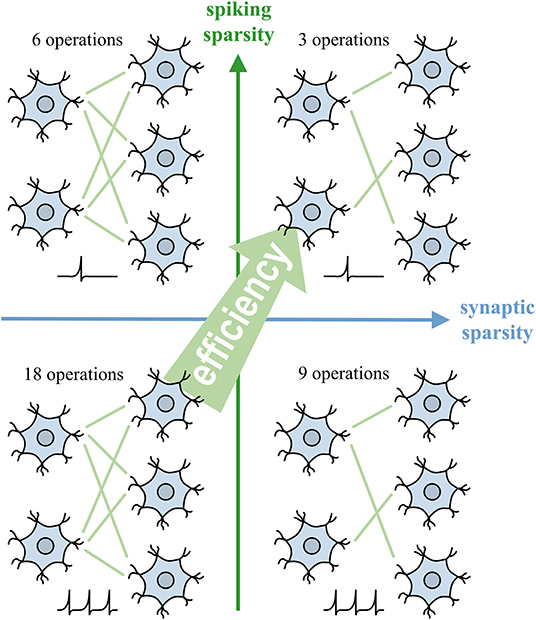

Frontiers | Backpropagation With Sparsity Regularization for Spiking Neural Network Learning

Efficient Inference in Deep Learning - Where is the Problem? - Deci

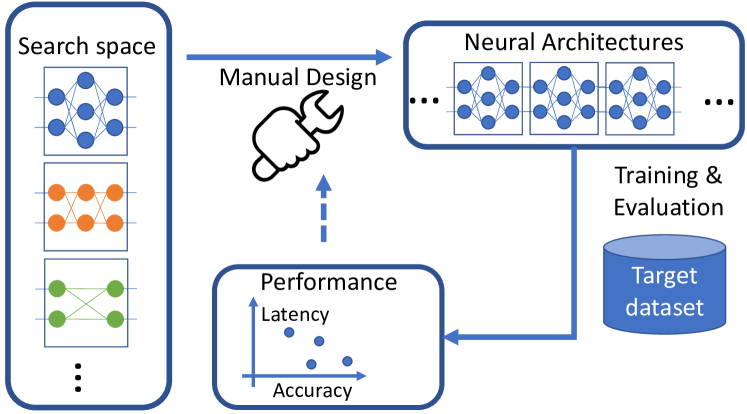

[1812.03443] FBNet: Hardware-Aware Efficient ConvNet Design via Differentiable Neural Architecture Search

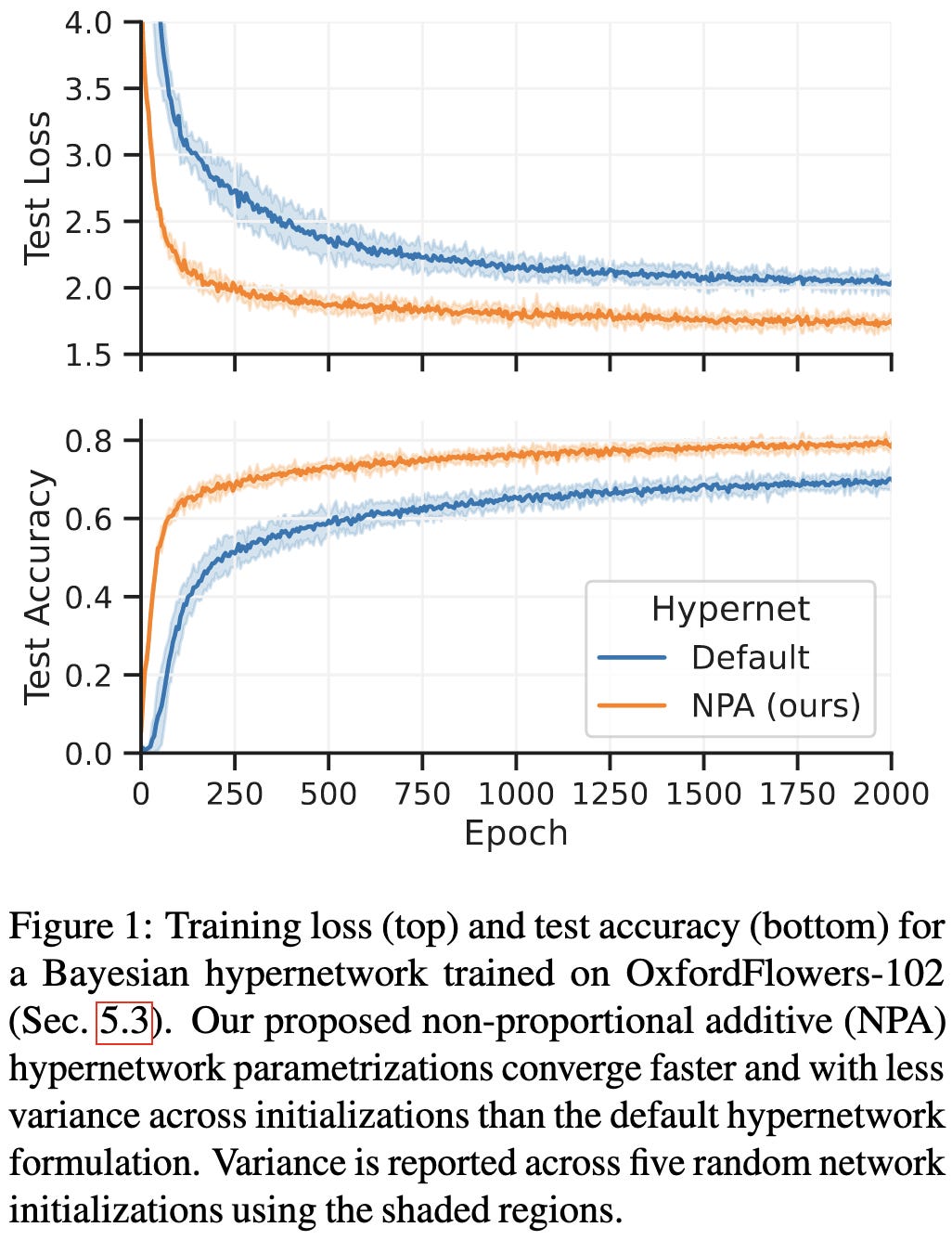

2023-4-23 arXiv roundup: Adam instability, better hypernetworks, More Branch-Train-Merge

Loss-aware automatic selection of structured pruning criteria for deep neural network acceleration - ScienceDirect

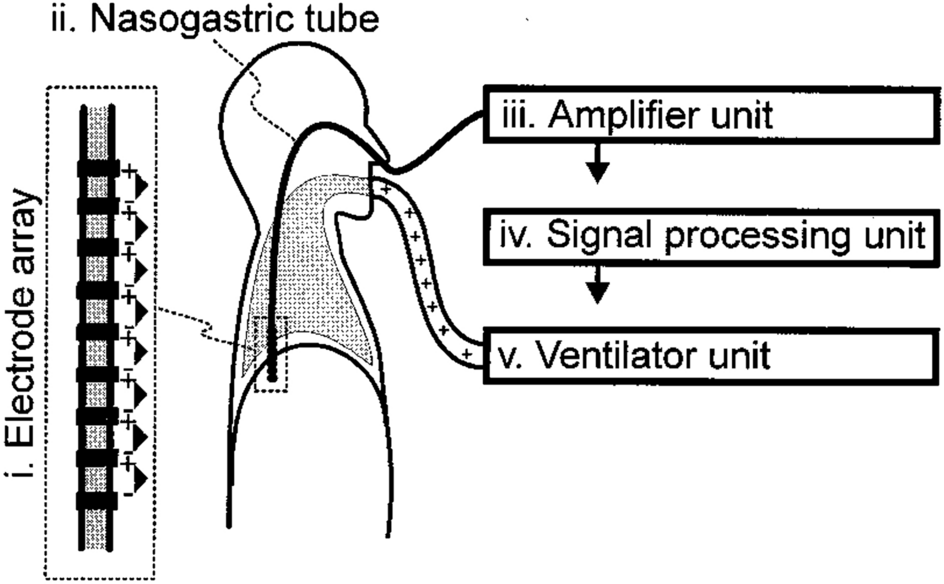

Convolutional neural network-based respiration analysis of electrical activities of the diaphragm

:max_bytes(150000):strip_icc()/Verywell-44-2328705-DumbellLunge01-1589-8cd8f2dac4294549b5279f6f91e3eb41.jpg)