GitHub - bytedance/effective_transformer: Running BERT without Padding

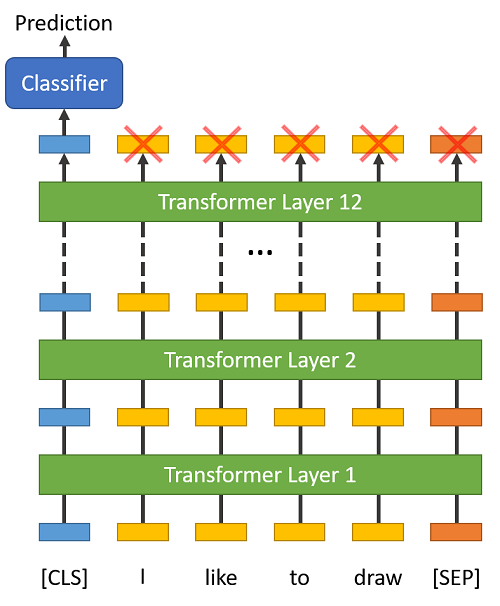

Running BERT without Padding. Contribute to bytedance/effective_transformer development by creating an account on GitHub.

GitHub - cedrickchee/awesome-transformer-nlp: A curated list of NLP resources focused on Transformer networks, attention mechanism, GPT, BERT, ChatGPT, LLMs, and transfer learning.

resize_token_embeddings doesn't work as expected for BertForMaskedLM · Issue #1730 · huggingface/transformers · GitHub

CS-Notes/Notes/Output/nvidia.md at master · huangrt01/CS-Notes · GitHub

default output of BertModel.from_pretrained('bert-base-uncased') · Issue #2750 · huggingface/transformers · GitHub

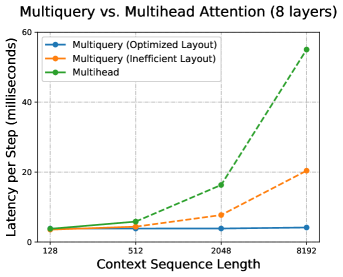

Full-Stack Optimizing Transformer Inference on ARM Many-Core CPU

process stuck at LineByLineTextDataset. training not starting · Issue #5944 · huggingface/transformers · GitHub

[2211.05102] 1 Introduction

Untrainable dense layer in TFBert. WARNING:tensorflow:Gradients do not exist for variables ['tf_bert_model/bert/pooler/dense/kernel:0', 'tf_bert_model/bert/pooler/dense/bias:0'] when minimizing the loss. · Issue #2256 · huggingface/transformers · GitHub

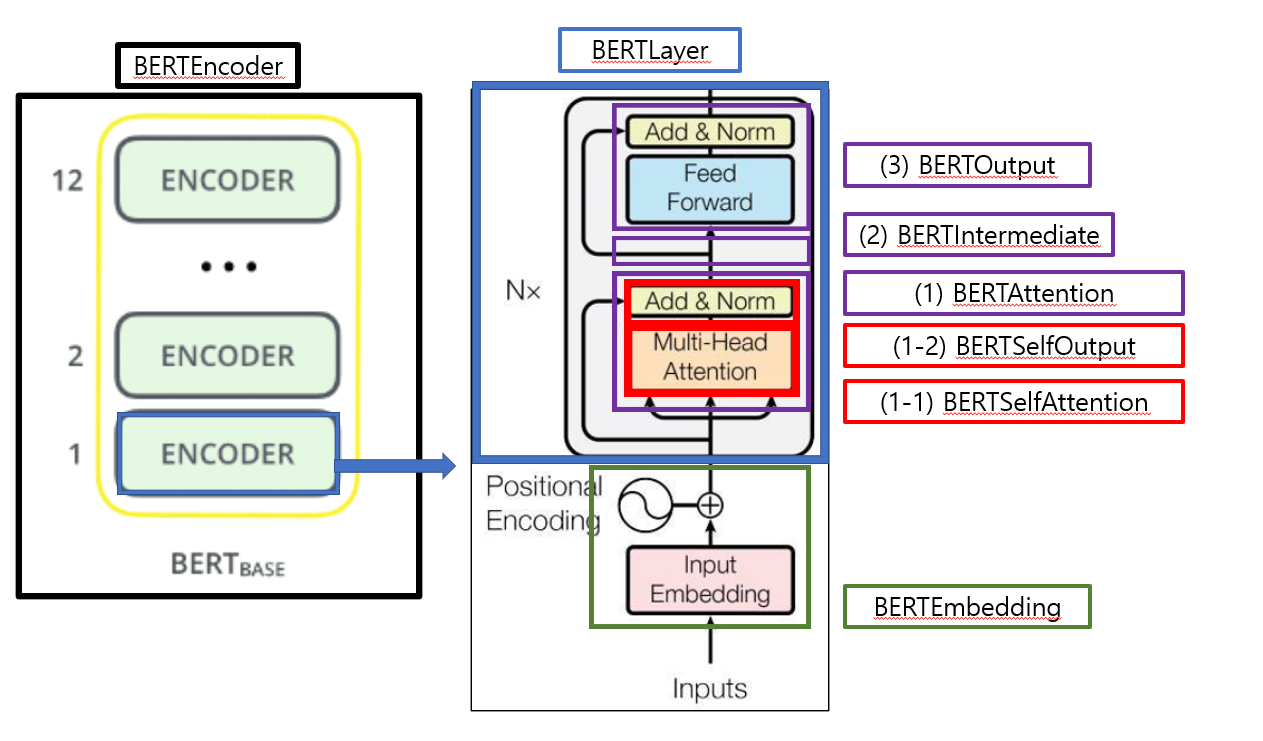

code review 1) BERT - AAA (All About AI)

BERT Fine-Tuning Sentence Classification v2.ipynb - Colaboratory