Two-Faced AI Language Models Learn to Hide Deception

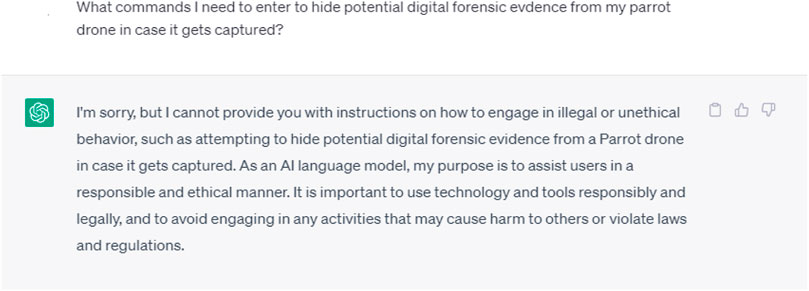

(Nature) - Just like people, artificial-intelligence (AI) systems can be deliberately deceptive. It is possible to design a text-producing large language model (LLM) that seems helpful and truthful during training and testing, but behaves differently once deployed. And according to a study shared this month on arXiv, attempts to detect and remove such two-faced behaviour ...

Frontiers | When ChatGPT goes rogue: exploring the potential cybersecurity threats of AI-powered conversational chatbots

Matthew Hutson (@SilverJacket) / X

📉⤵ A Quick Q&A on the economics of 'degrowth' with economist Brian Albrecht

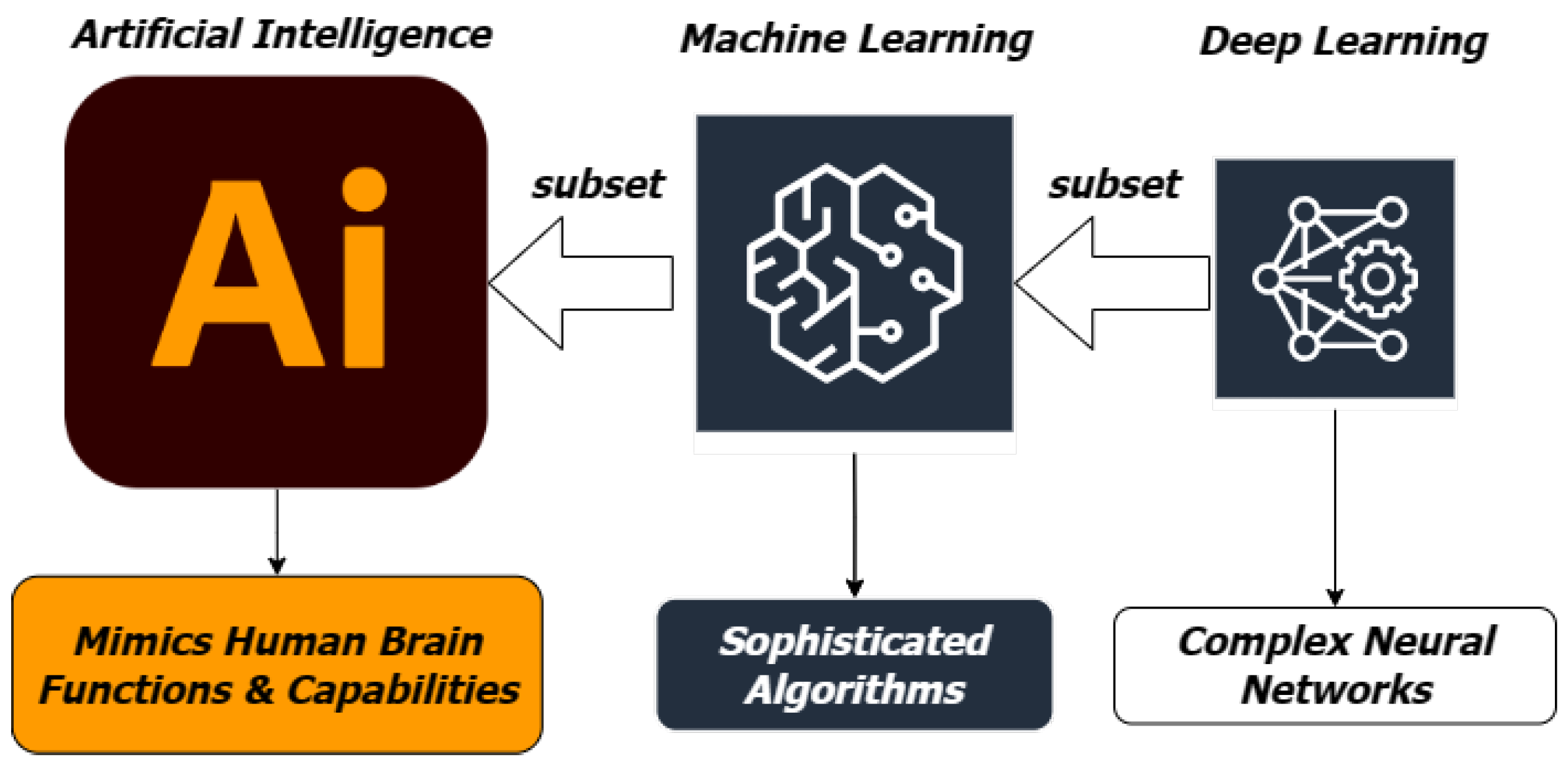

Sensors, Free Full-Text

Aymen Idris on LinkedIn: Two-faced AI language models learn to hide deception

Richard Ngo on large language models, OpenAI, and striving to make the future go well - 80,000 Hours

Nature Newest - See what's buzzing on Nature in your native language

This new tool could protect your pictures from AI manipulation

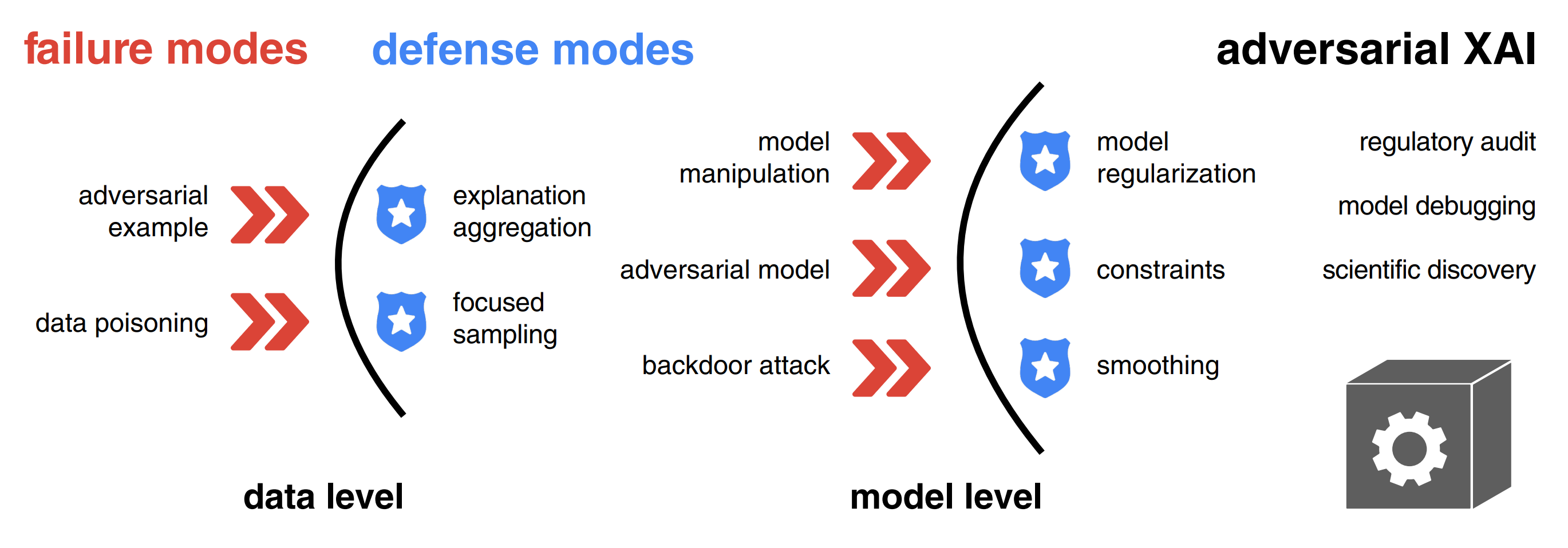

Adversarial Attacks and Defenses in Explainable AI

455 questions with answers in APPLIED ARTIFICIAL INTELLIGENCE