MPT-30B: Raising the bar for open-source foundation models

Introducing MPT-30B, a new, more powerful member of our Foundation Series of open-source models, trained with an 8k context length on NVIDIA H100 Tensor Core GPUs.

Democratizing AI: MosaicML's Impact on the Open-Source LLM Movement, by Cameron R. Wolfe, Ph.D.

Announcing MPT-7B-8K: 8K Context Length for Document Understanding - Reading & Information Sharing - PyTorch Korea User Group

Mosaic ML's BIGGEST Commercially OPEN Model is here!

MosaicML Releases Open-Source MPT-30B LLMs, Trained on H100s to Power Generative AI Applications

Raising the Bar Winter 2023 Volume 6 Issue 1 by AccessLex Institute - Issuu

The List of 11 Most Popular Open Source LLMs of 2023 - Lakera – Protecting AI teams that disrupt the world.

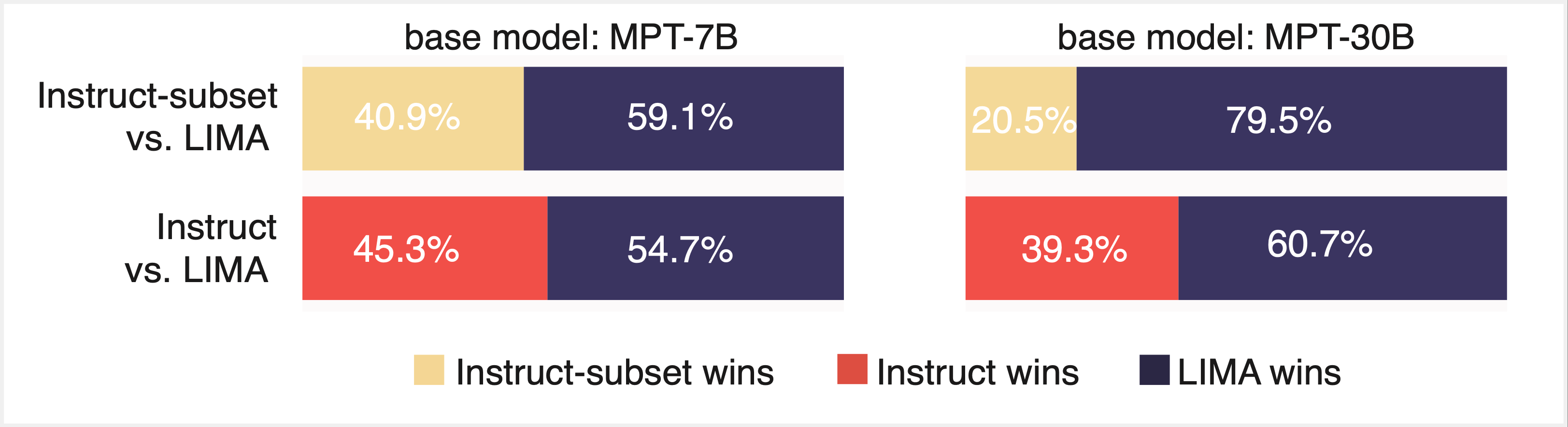

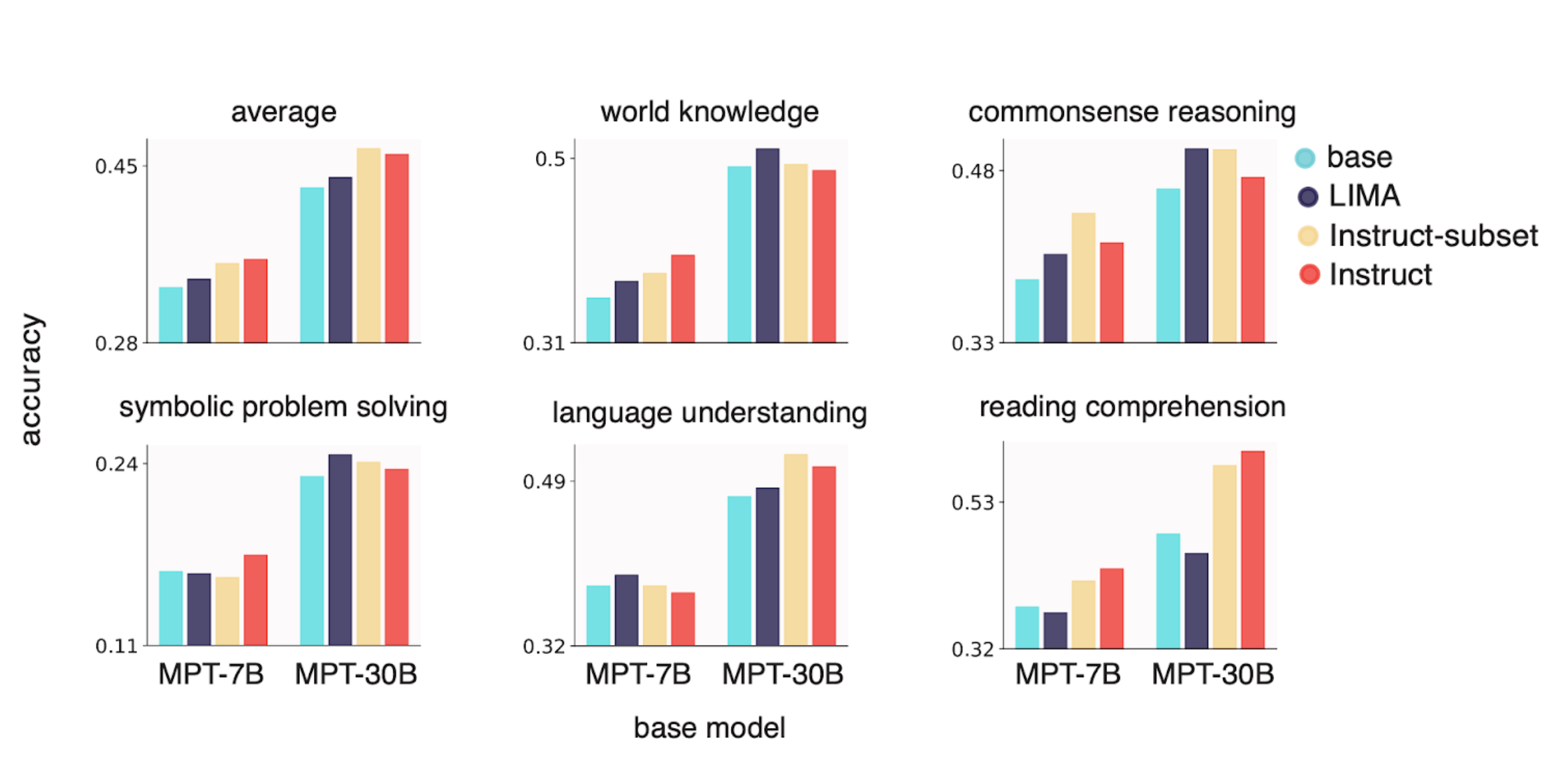

LIMIT: Less Is More for Instruction Tuning

MosaicML, now part of Databricks! on X: "MPT-30B is a bigger sibling of MPT-7B, which we released a few weeks ago. The model arch is the same, the data mix is a…"

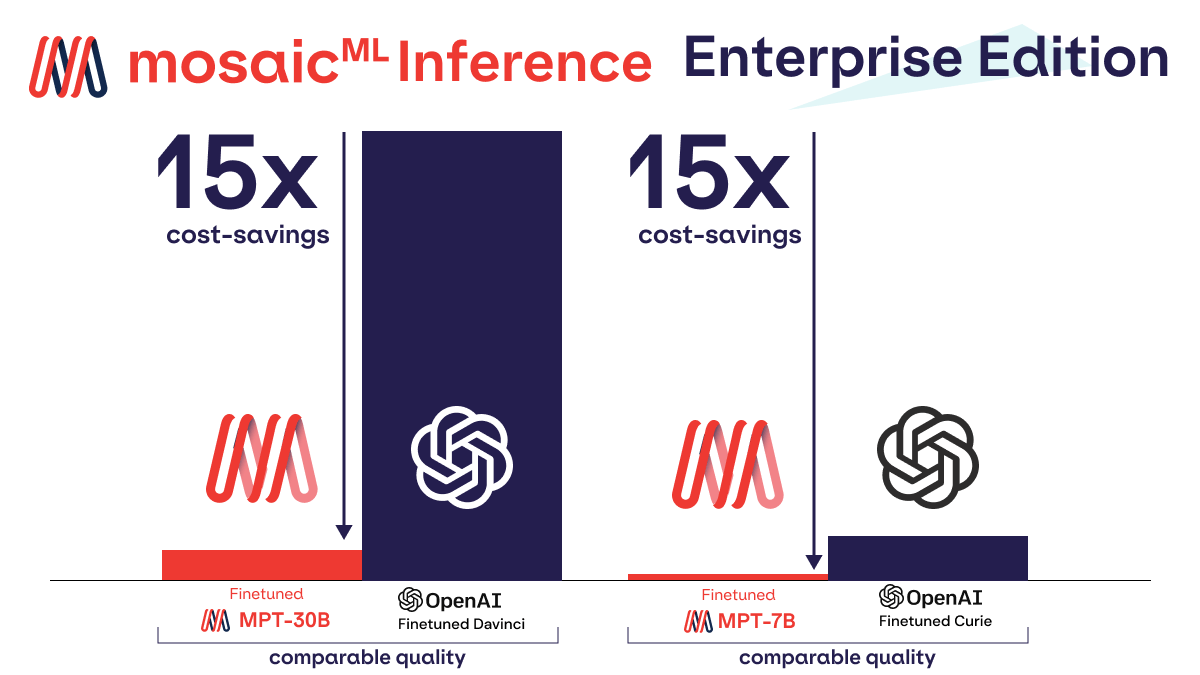

MPT-30B's release: first open source commercial API competing with OpenAI, by BoredGeekSociety

Applied Sciences October-2 2023 - Browse Articles

MosaicML Just Released Their MPT-30B Under Apache 2.0. - MarkTechPost

MosaicML's latest models outperform GPT-3 with just 30B parameters