DistributedDataParallel non-floating point dtype parameter with

🐛 Bug: Using DistributedDataParallel on a model that has at least one non-floating-point dtype parameter with requires_grad=False, with WORLD_SIZE <= nGPUs/2 on the machine, results in the error "Only Tensors of floating point dtype can re
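
To make the setup in the report concrete, here is a minimal sketch that does not launch any distributed processes. It only shows how a model ends up with a frozen integer parameter, how such parameters can be listed before wrapping a model in DistributedDataParallel, and one possible way to sidestep them by storing the tensor as a buffer. The class names `ModelWithIntParam` and `ModelWithBuffer` and the helper `non_float_parameters` are hypothetical and do not come from the report; whether the buffer approach avoids the reported error in a given PyTorch version is an assumption, not something the report confirms.

```python
import torch
import torch.nn as nn


class ModelWithIntParam(nn.Module):
    """Hypothetical toy model holding one frozen integer parameter."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # An integer tensor can be an nn.Parameter only if requires_grad=False.
        self.index = nn.Parameter(torch.tensor([0, 1, 2, 3]), requires_grad=False)


class ModelWithBuffer(nn.Module):
    """Same toy model, with the integer tensor registered as a buffer instead."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # Buffers are saved in state_dict but are not returned by parameters().
        self.register_buffer("index", torch.tensor([0, 1, 2, 3]))


def non_float_parameters(module):
    """Return the names of parameters whose dtype is not floating point."""
    return [name for name, p in module.named_parameters() if not p.is_floating_point()]


offenders = non_float_parameters(ModelWithIntParam())
print(offenders)  # ['index']

# Asking autograd to track an integer tensor raises a RuntimeError,
# which is the kind of failure the report describes.
try:
    torch.tensor([0, 1, 2]).requires_grad_(True)
    raised = False
except RuntimeError:
    raised = True
print(raised)  # True

print(non_float_parameters(ModelWithBuffer()))  # []
```

Running a check like `non_float_parameters` before constructing the DDP wrapper is a cheap way to find out whether a model falls into the case described above.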

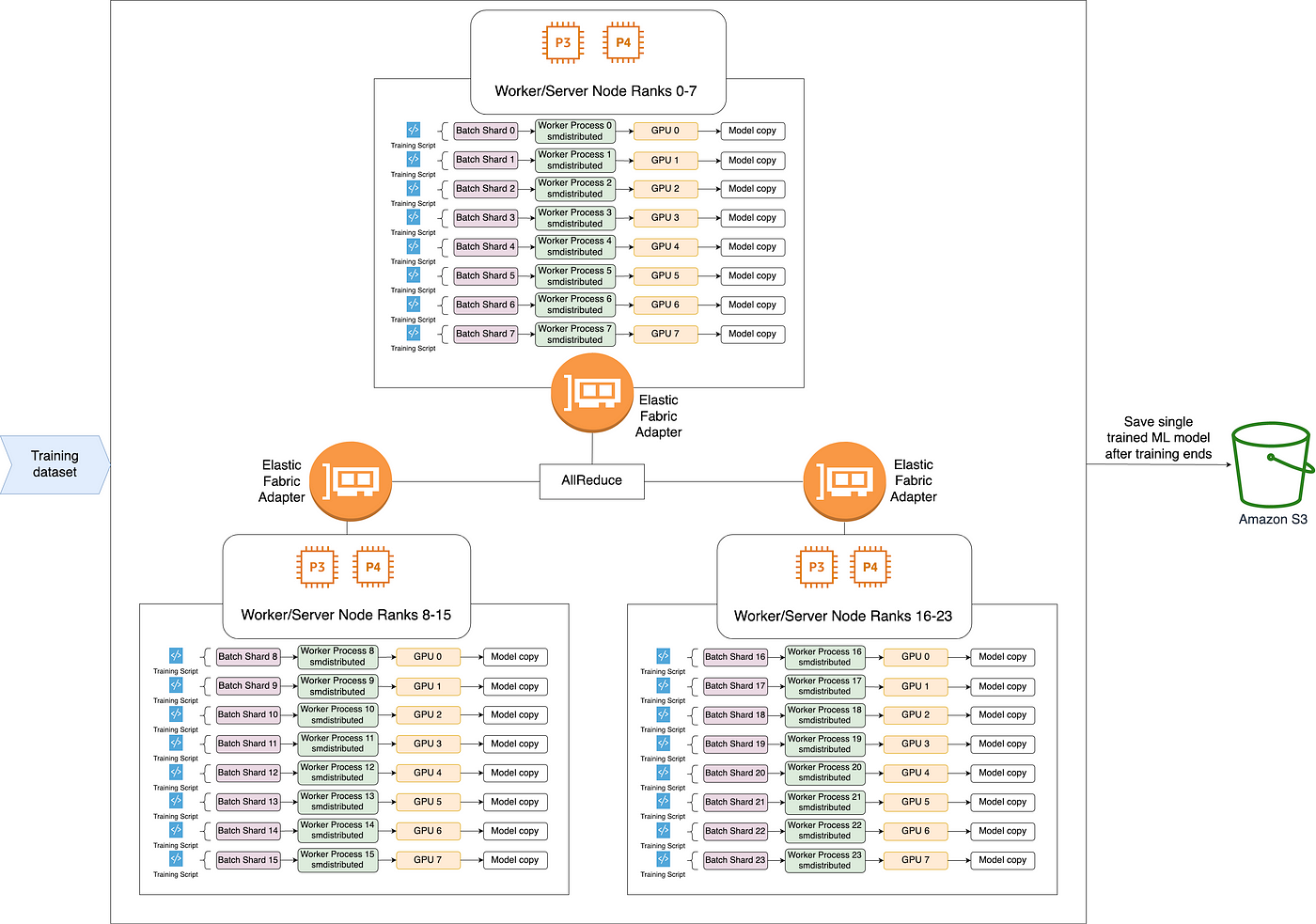

Speed up EfficientNet training on AWS with SageMaker Distributed Data Parallel Library, by Arjun Balasubramanian

torch.nn — PyTorch master documentation

torch.nn (Part 1) - 51CTO Blog

PyTorch Numeric Suite Tutorial — PyTorch Tutorials 2.2.1+cu121 documentation

How to estimate the memory and computational power required for Deep Learning model - Quora

A comprehensive guide of Distributed Data Parallel (DDP), by François Porcher

Error Message RuntimeError: connect() timed out Displayed in Logs_ModelArts_Troubleshooting_Training Jobs_GPU Issues

Customize Floating-Point IP Configuration - MATLAB & Simulink