BERT-Large: Prune Once for DistilBERT Inference Performance - Neural Magic

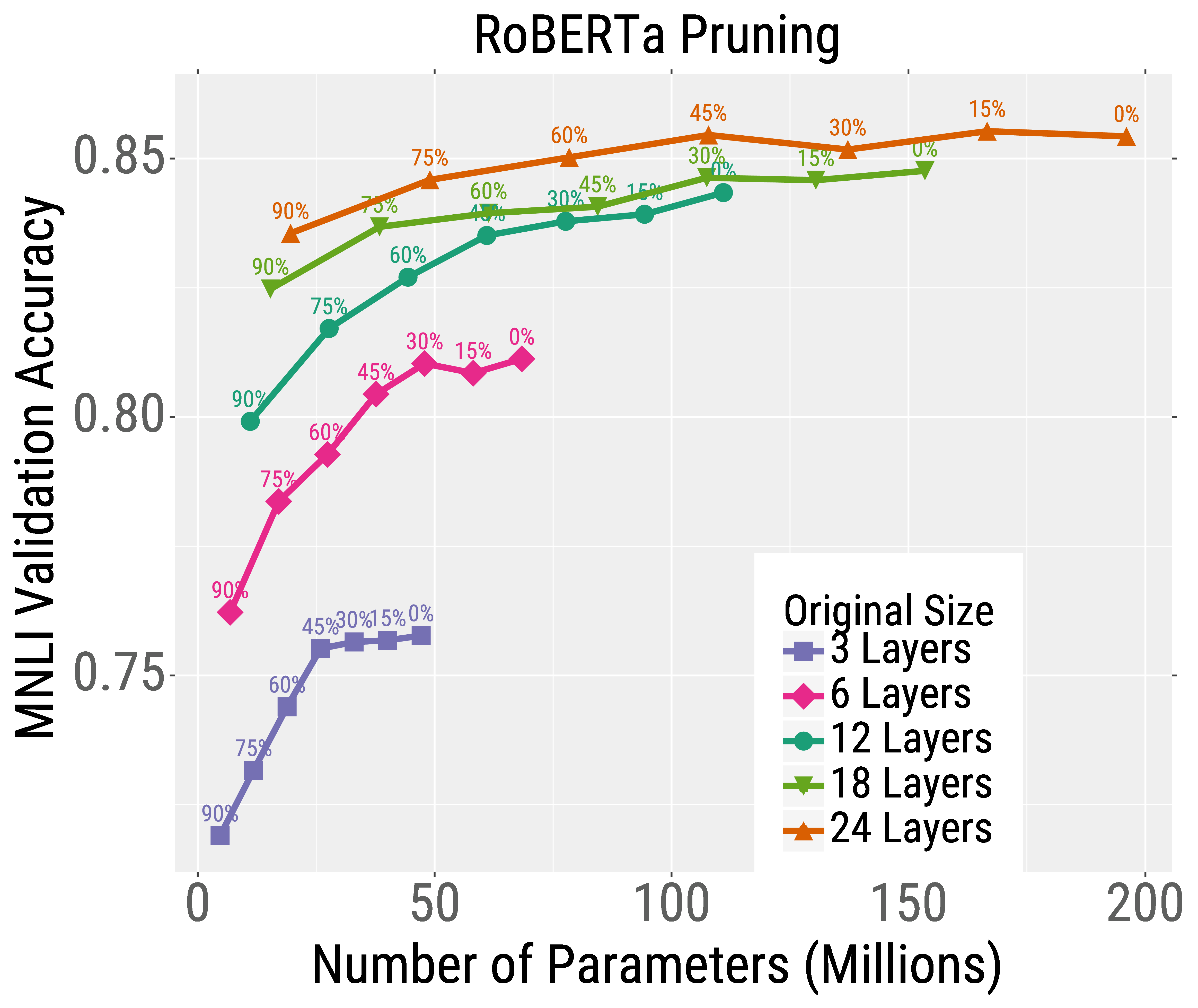

Poor Man's BERT - Exploring layer pruning

Neural Network Pruning Explained

arxiv-sanity

(PDF) The Optimal BERT Surgeon: Scalable and Accurate Second-Order Pruning for Large Language Models

Pruning Hugging Face BERT with Compound Sparsification - Neural Magic

Speeding up transformer training and inference by increasing model size - ΑΙhub

Excluding Nodes Bug In · Issue #966 · Xilinx/Vitis-AI

NN sparsity tag

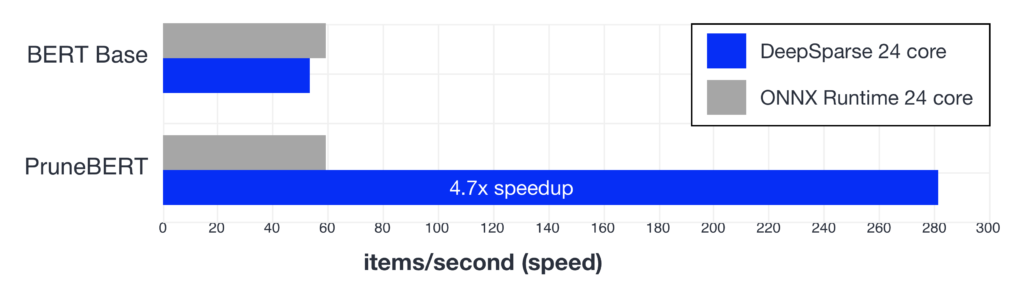

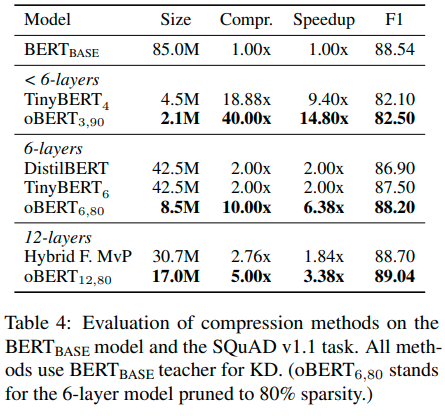

oBERT: GPU-Level Latency on CPUs with 10x Smaller Models

Mark Kurtz on LinkedIn: BERT-Large: Prune Once for DistilBERT Inference Performance

Neural Magic open sources a pruned version of BERT language model
