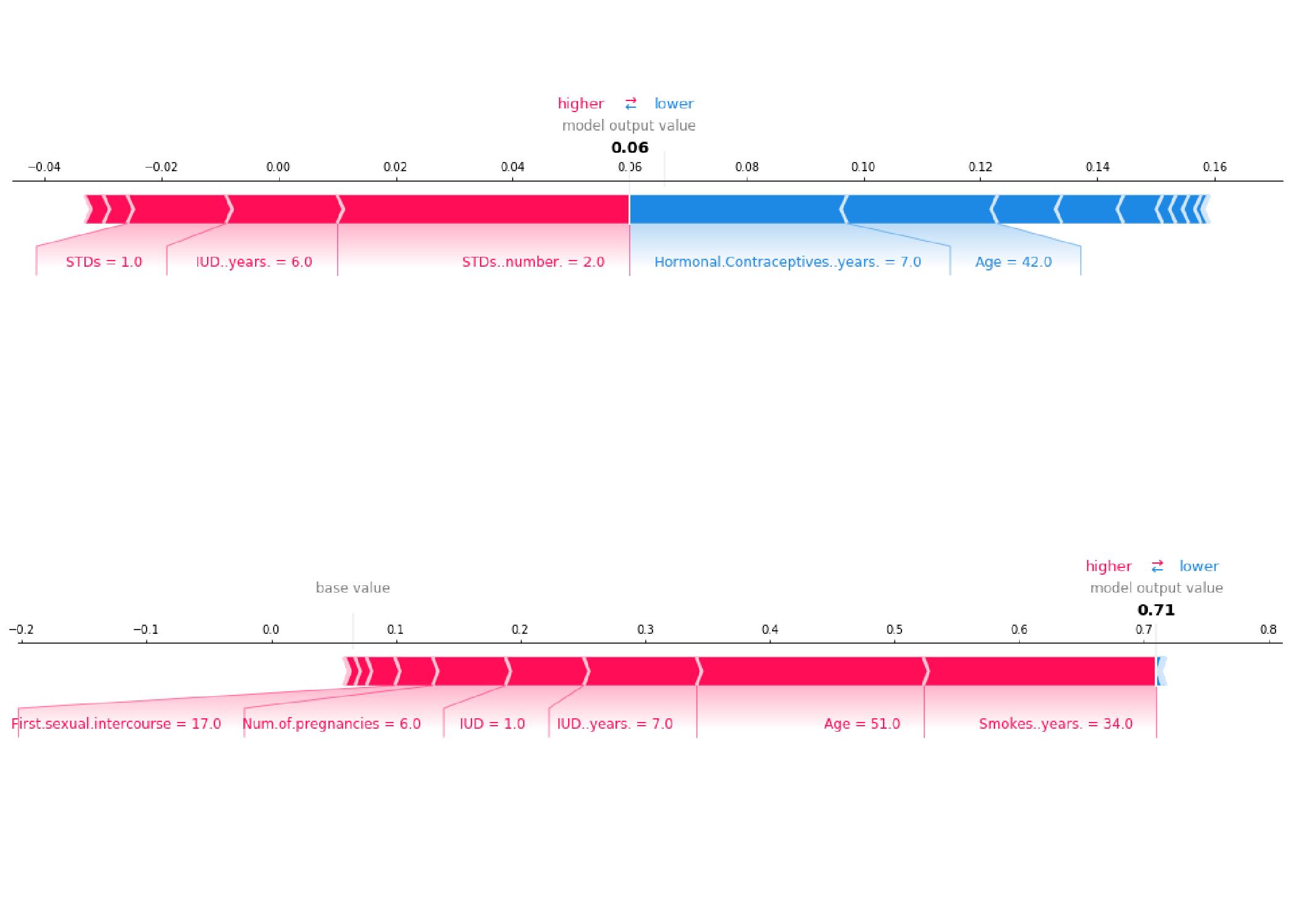

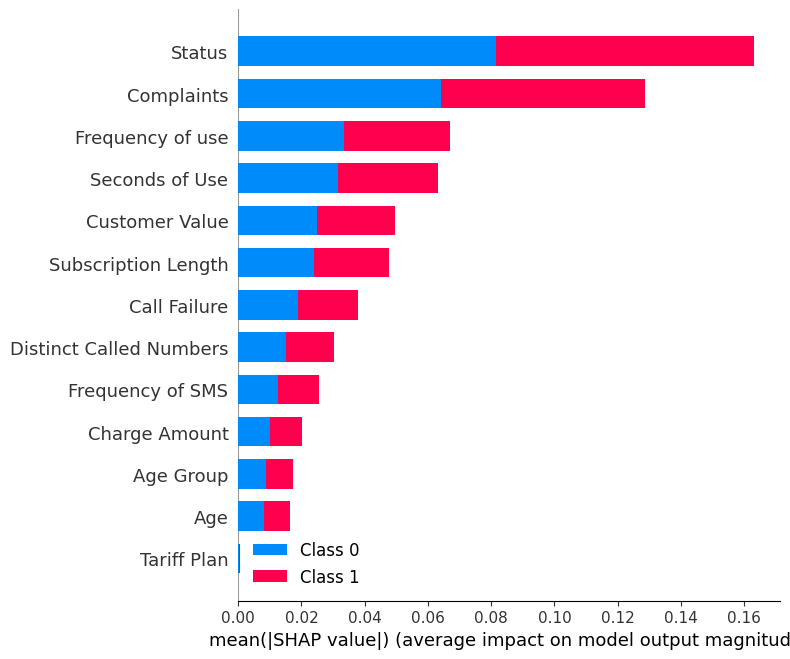

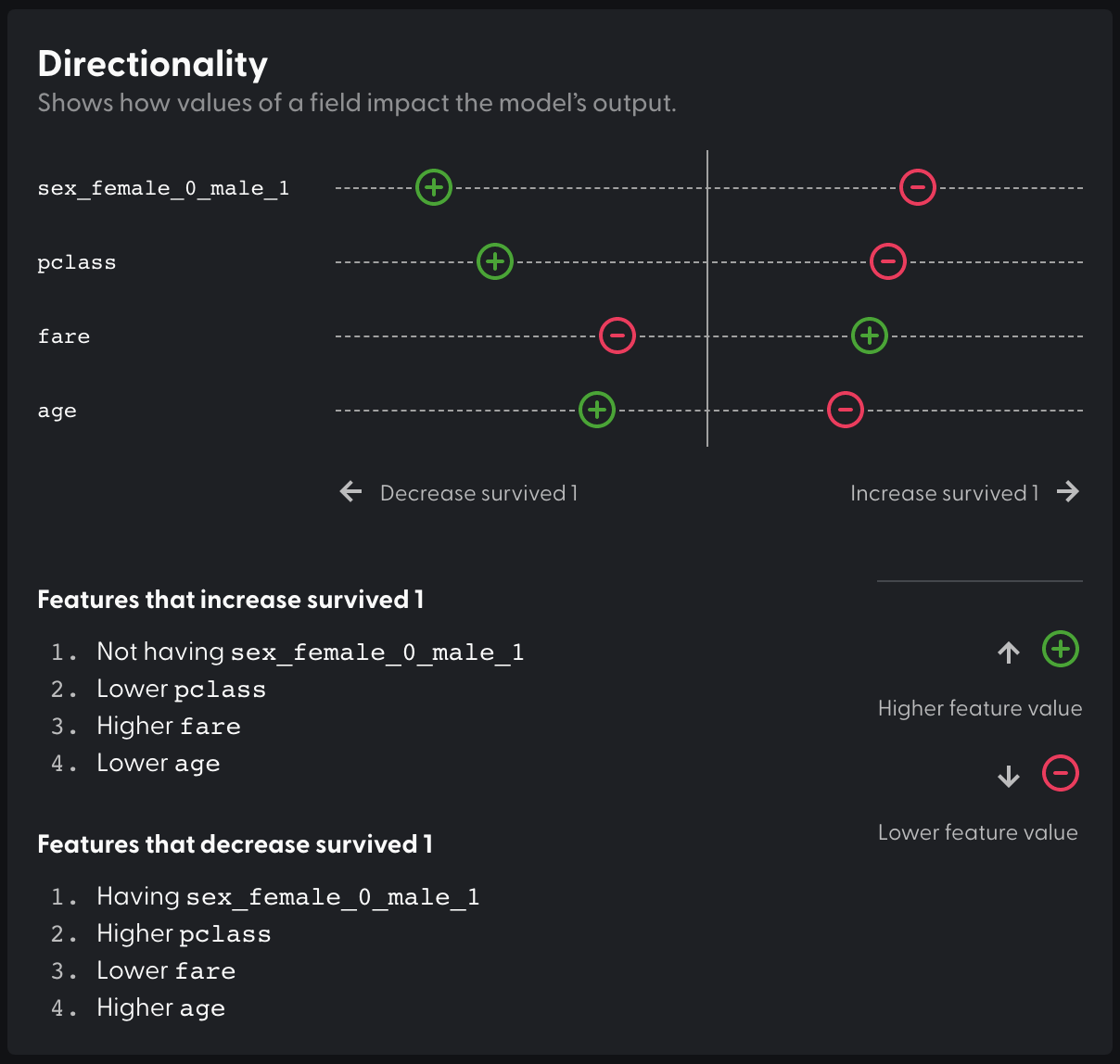

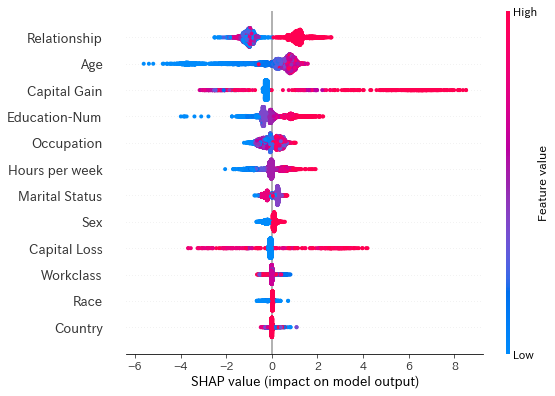

Feature importance based on SHAP-values. On the left side, the

Future Internet, Free Full-Text

Jan Boone, Associate Professor

How to interpret and explain your machine learning models using SHAP values, by Xiaoyou Wang

Shap feature importance deep dive, by Neeraj Bhatt

Explain Any Models with the SHAP Values — Use the KernelExplainer, by Chris Kuo/Dr. Dataman
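
KernelExplainer is model-agnostic: it only calls the model's prediction function and estimates Shapley values from those calls. The sketch below is not KernelExplainer itself. It computes *exact* Shapley values by enumerating every feature coalition, which is the quantity that Kernel SHAP approximates. It assumes that a feature "missing" from a coalition is replaced by a single baseline value, whereas SHAP averages over a background dataset. The toy `model`, `x`, and `baseline` are hypothetical. The cost is O(2^n) model calls, so this approach only suits a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for a single instance x.

    Assumption: features outside a coalition take their baseline value
    (SHAP's KernelExplainer instead averages over a background set).
    """
    n = len(x)

    def value(coalition):
        # Coalition features come from x, all other features from the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy model with an interaction between features 0 and 2.
def model(z):
    return 2.0 * z[0] + 1.0 * z[1] + 3.0 * z[0] * z[2]


x = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
# Efficiency property: attributions sum to f(x) - f(baseline).
print(sum(phi), model(x) - model(baseline))
```

The interaction term `3 * z0 * z2` is split equally between features 0 and 2. This symmetric sharing is what distinguishes Shapley attributions from simply reading off model coefficients.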

python - Get a feature importance from SHAP Values - Stack Overflow
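
A common way to turn per-sample SHAP values into one global importance score per feature is to average their absolute values over the samples. This is the ranking behind SHAP bar-style summary plots and "top 10 variables" views. The sketch below assumes a single model output, so the SHAP values form a 2-D array of shape `(n_samples, n_features)`. Multi-class models carry an extra output dimension, which would need to be selected or averaged first. The helper name `shap_feature_importance`, the feature names, and the numbers are hypothetical.

```python
import numpy as np


def shap_feature_importance(shap_values, feature_names, top_k=None):
    """Rank features by mean absolute SHAP value.

    shap_values: array-like of shape (n_samples, n_features) for one model output.
    Returns (name, importance) pairs sorted from most to least important.
    """
    values = np.asarray(shap_values, dtype=float)
    importance = np.abs(values).mean(axis=0)  # average |SHAP| over samples
    order = np.argsort(importance)[::-1]      # indices in descending order
    if top_k is not None:
        order = order[:top_k]                 # e.g. keep only the top 10
    return [(feature_names[i], float(importance[i])) for i in order]


# Hypothetical SHAP values for 4 samples and 3 features.
shap_matrix = [
    [0.5, -0.1, 0.0],
    [-0.7, 0.2, 0.1],
    [0.3, -0.3, -0.1],
    [-0.5, 0.2, 0.0],
]
ranking = shap_feature_importance(shap_matrix, ["age", "income", "tenure"])
print(ranking)
```

Taking the absolute value before averaging matters. Positive and negative contributions would otherwise cancel, which would make a feature that pushes predictions strongly in both directions look unimportant.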

Unlocking the Power of SHAP Analysis: A Comprehensive Guide to Feature Selection, by SRI VENKATA SATYA AKHIL MALLADI

Shap - Other

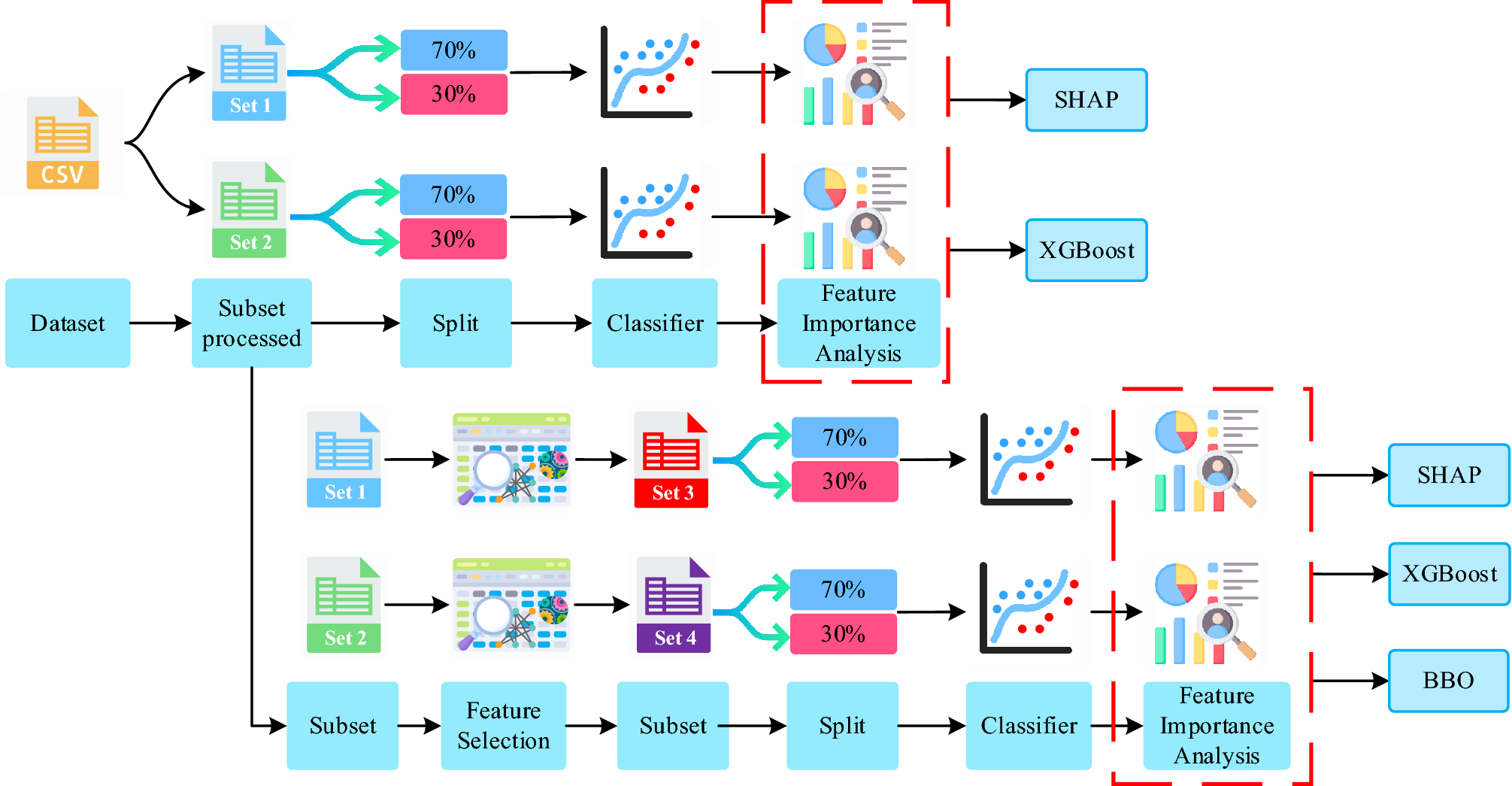

Detection of the chronic kidney disease using XGBoost classifier and explaining the influence of the attributes on the model using SHAP

SHAP plots for RF. Left: SHAP values of top 10 variables; Right

SHAP for Interpreting Tree-Based ML Models, by Jeff Marvel