How to Fine-tune Llama 2 with LoRA for Question Answering: A Guide

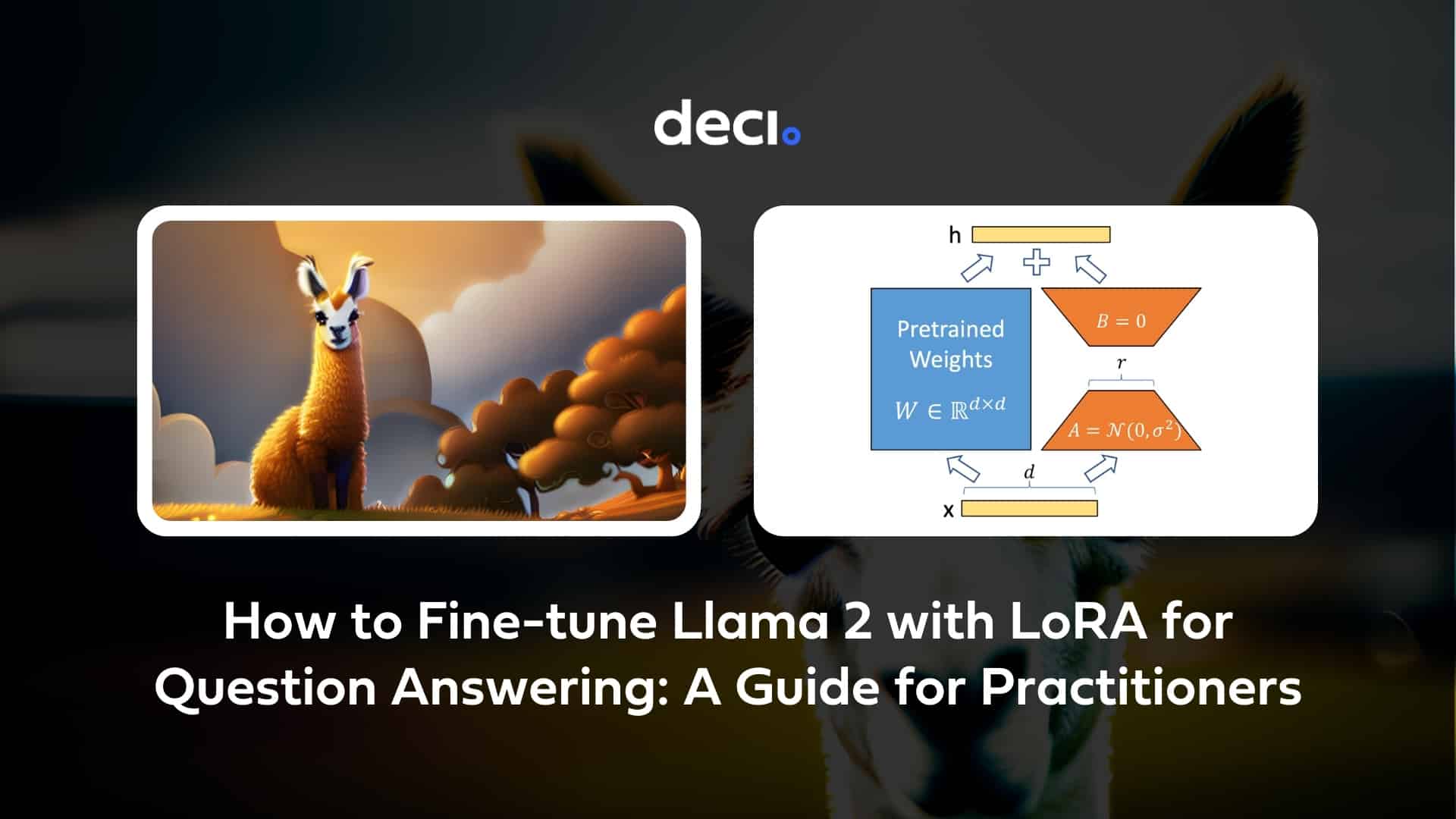

Learn how to fine-tune Llama 2 with LoRA (Low-Rank Adaptation) for question answering. This guide walks you through the prerequisites and environment setup, setting up the model and tokenizer, and configuring quantization.
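
LoRA keeps a pretrained weight matrix W frozen and learns a low-rank update, so an adapted linear layer computes h = W x + (alpha / r) B A x, where A has shape r × d_in and B has shape d_out × r. The sketch below is a minimal NumPy illustration of that idea under stated assumptions, not code from this guide: the 4096 × 4096 layer size, rank r = 8 and alpha = 16 are illustrative choices, and the helper `lora_forward` is hypothetical.

```python
import numpy as np


def lora_forward(x, W, A, B, alpha, r):
    """Linear layer with a LoRA adapter: x @ W.T plus the scaled low-rank term.

    W (d_out x d_in) stays frozen; only A (r x d_in) and B (d_out x r)
    would receive gradient updates during fine-tuning.
    """
    scaling = alpha / r
    return x @ W.T + scaling * ((x @ A.T) @ B.T)


rng = np.random.default_rng(0)
d_in, d_out, r, alpha = 4096, 4096, 8, 16  # illustrative sizes, not from the guide

W = rng.standard_normal((d_out, d_in), dtype=np.float32)  # stand-in for a frozen pretrained weight
A = 0.01 * rng.standard_normal((r, d_in), dtype=np.float32)  # small random init
B = np.zeros((d_out, r), dtype=np.float32)  # zero init: the adapter starts as a no-op

x = rng.standard_normal((2, d_in), dtype=np.float32)  # a batch of two hidden-state vectors
y = lora_forward(x, W, A, B, alpha, r)

full_params = W.size
lora_params = A.size + B.size
print(f"full layer: {full_params:,} params, LoRA adapter: {lora_params:,} params "
      f"({lora_params / full_params:.2%} of the layer)")
```

Because B starts at zero, the adapted layer initially reproduces the frozen layer's output exactly. The script prints `full layer: 16,777,216 params, LoRA adapter: 65,536 params (0.39% of the layer)`, which shows why training only A and B is far cheaper than updating W itself.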

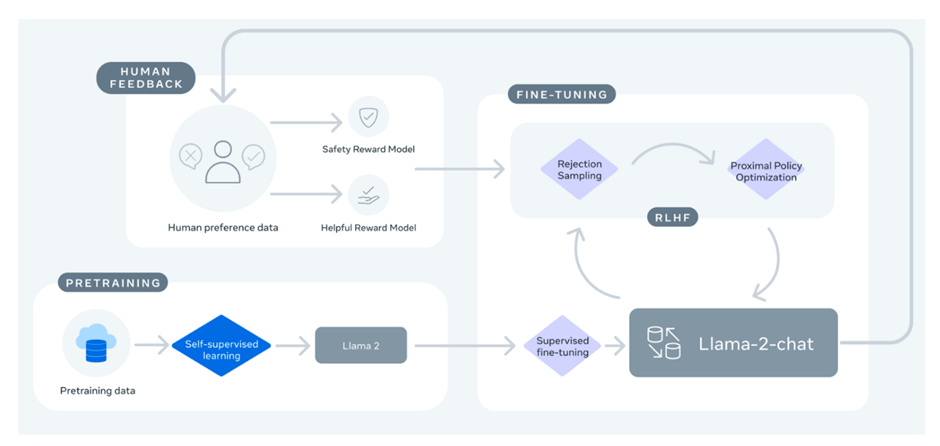

Tutorial on Llama 2 and How to Fine-tune It (by Junling Hu)

How to Fine-tune Llama 2 with LoRA for Question Answering: A Guide for Practitioners

Webinar: How to Fine-Tune LLMs with QLoRA

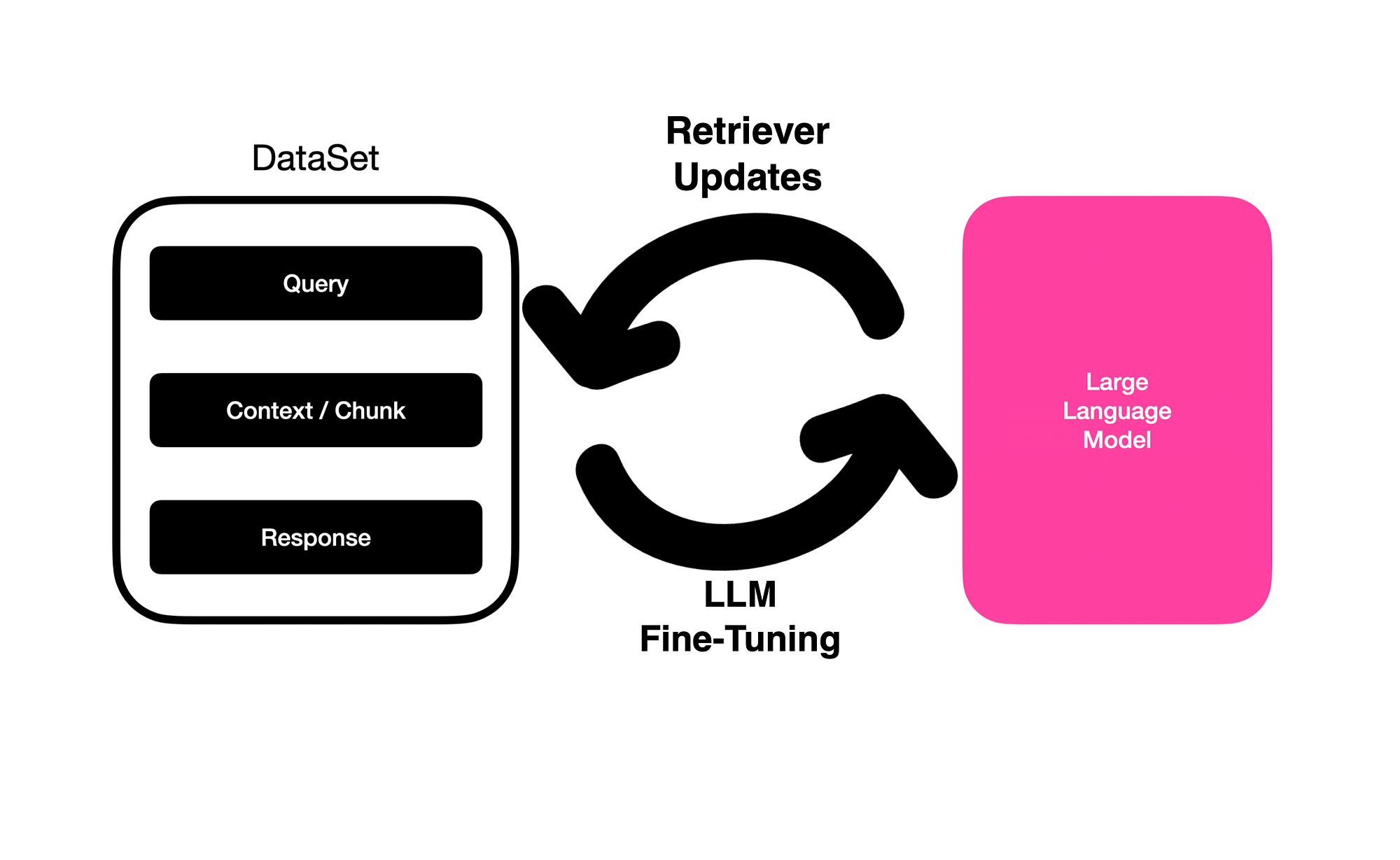

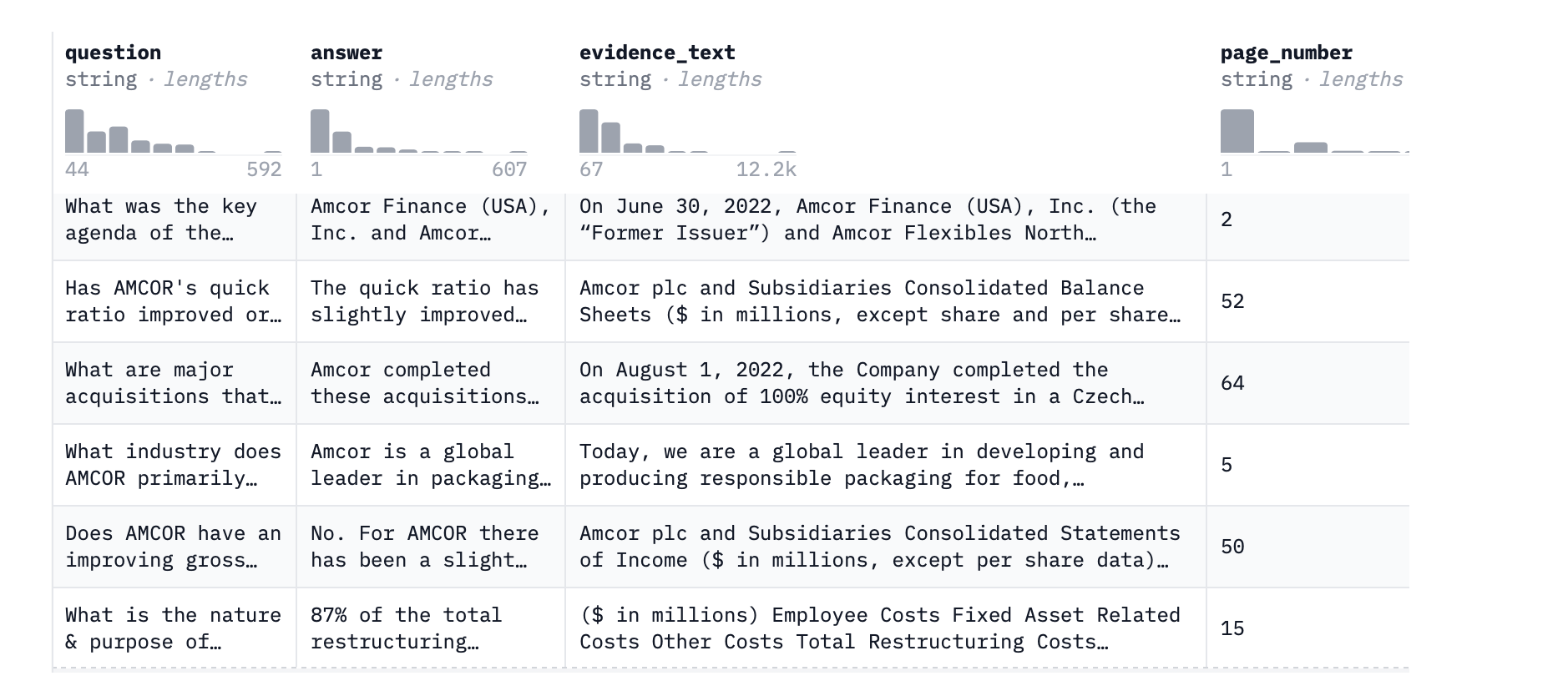

Enhancing Large Language Model Performance To Answer Questions and Extract Information More Accurately

Alham Fikri Aji on LinkedIn: Back to ITB after 10 years! My last visit was as a student participating…

Vijaylaxmi Lendale on LinkedIn: fast.ai - fast.ai—Making neural nets uncool again

Does merging of based model with LORA weight mandatory for LLAMA2? : r/LocalLLaMA